MLOps, also called Machine Learning Ops or Model Ops, is a set of practices for collaboration and communication between data scientists, data engineers, and DevOps professionals. Applying these practices increases the quality of solutions, simplifies management, and automates the deployment of machine learning and deep learning models in large-scale production environments. An MLOps platform makes it easier to align ML models with business needs as well as regulatory requirements.

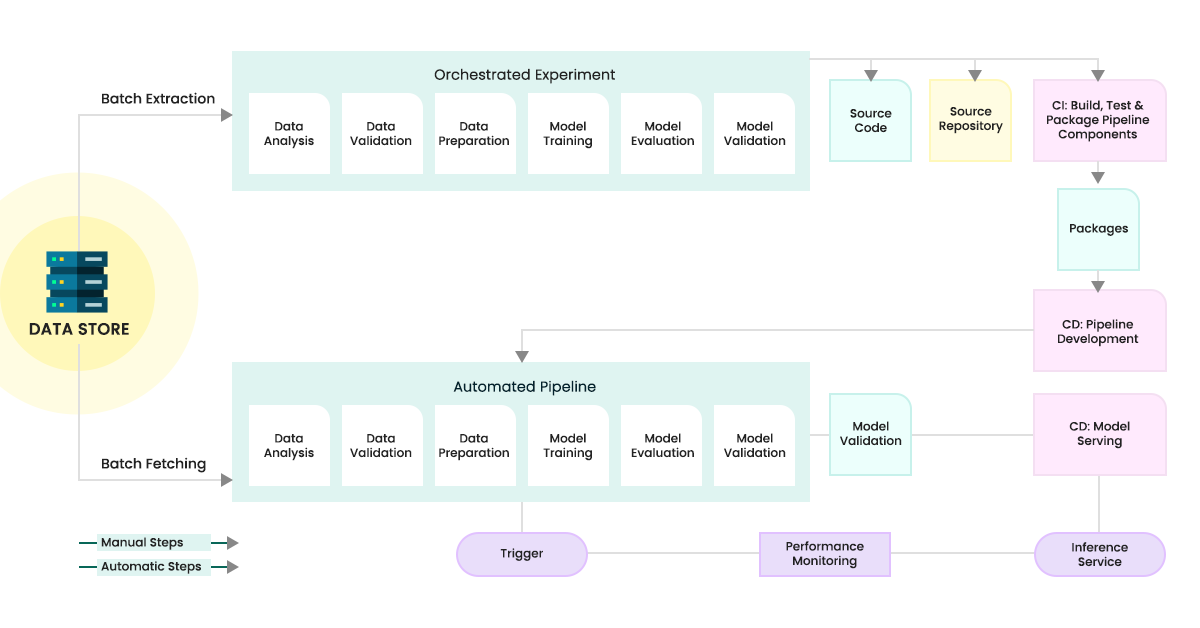

MLOps is slowly evolving into an independent approach to ML lifecycle management. It applies to the entire lifecycle – data gathering, model creation (software development lifecycle, continuous integration/continuous delivery), orchestration, deployment, health, diagnostics, governance, and business ROI validations. The following figure represents the end-to-end process of MLOps:

In our first article in this series (XOps: The right approach for production business applications), we introduced XOps as the future for setting up and running production business applications at scale. Tredence’s XOps offering provides customers with a combination of in-house experts, strong partnerships, and the right accelerators for each point in the production business process value chain. We also shared a thorough overview of BizOps, DevSecOps, and DataOps.

In this article, we continue that train of thought on enterprise-grade production systems and dive deep into managing ML/AI innovations organization-wide. Critical ML-powered applications must be kept up to date so that they continue delivering relevant insights to business stakeholders and end customers. Furthermore, application and process security must be preserved so that the confidentiality, integrity, accessibility, and regulatory compliance of the applications are maintained.

Why do we need MLOps?

Managing models in production is challenging. To optimize the value of machine learning, machine learning models must improve efficiency in business applications or support efforts to make better decisions as they run in production. MLOps best practices and technology enable businesses to deploy, manage, monitor, and govern ML.

Important data science practices are evolving to include more model management and operational functions, ensuring that models do not negatively impact the business by producing erroneous results. Retraining models with updated data sets now includes automating that process, recognizing model drift, and alerting when the drift becomes significant. Each of these aspects is equally vital. In addition, model performance relies on maintaining the underlying technology and MLOps platforms, and on recognizing when models need upgrades.
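To make "recognizing model drift, and alerting when it becomes significant" concrete, here is a minimal sketch of one common way to score drift on a single numeric feature: the population stability index (PSI). The choice of PSI, the function names, the ten equal-width bins, and the 0.2 alert threshold are all illustrative assumptions; the article does not prescribe a specific metric or threshold.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a reference (training) sample and a production sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant feature

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the reference range
            counts[idx] += 1
        # A small floor avoids log(0) when a bucket is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(psi: float, threshold: float = 0.2) -> bool:
    """Hypothetical alert rule: flag drift once PSI reaches the threshold."""
    return psi >= threshold
```

In a scheduled monitoring job, a check like this could run per feature against each new batch of production data, with `drift_alert` deciding whether to notify the support team or trigger retraining. The sketch assumes both samples are non-empty.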

This doesn’t mean that the work of data scientists is changing. It means that machine learning operations practices are eliminating data silos and broadening the team. In this way, data scientists can focus on building and deploying models rather than on platform engineering decisions that might be out of their scope, while MLOps engineers are empowered to manage ML that is already in production.

Today, ML has a profound impact on a wide range of verticals such as financial services, telecommunications, healthcare, retail, education, and manufacturing. Within all of these sectors, ML is driving faster and better decisions in business-critical use cases, from marketing and sales to business intelligence, R&D, production, executive management, IT, and finance.

Major challenges of not adopting MLOps as a practice include:

- Issues with Deployment: Businesses don’t realize the full benefits of AI because models are not deployed. Or, if they are deployed, it’s not at the speed or scale to meet the needs of the business.

- Issues with Machine Learning Monitoring: Evaluating machine learning model health manually is very time-consuming and takes resources away from model development.

- Issues with Lifecycle Management: Even if they can identify model decay, organizations cannot regularly update models in production because the process is resource-intensive. There are also concerns that manual code is brittle, and the potential for outages is high.

- Issues with Model Governance: Businesses need time-consuming and costly audit processes in order to ensure compliance as a result of varied deployment processes, modeling languages, and the lack of a centralized view of AI in production across an organization.

How can Tredence help enterprises implement MLOps?

- Tredence’s MLOps solutions give visibility into production model performance for different personas like data scientists, data engineers and business users. Reduced dependency on DS/DE teams for production support can expedite the root cause identification of production issues.

- Streamlined model monitoring and model remediation processes improve the overall efficiency of data science, data engineering, and support teams.

- Automated notifications to enable efficient production support

Tredence’s monitoring tool is the hub that brings all these pieces together. It helps you monitor all the automations put in place and notifies the user of any issues. The tool is a one-stop dashboard for different personas and helps bridge the gap between different teams in a large-scale enterprise. The table below summarizes what can be covered with Tredence’s state-of-the-art MLOps practice:

| ML WORKS FEATURE | BENEFITS |
|---|---|
| Provenance Tracking | Pipeline provenance tracking allows quick identification and fix of issues |
| Monitoring | Persona-based metrics provide visibility to different ML personas like data scientists, data engineers, and business users |
| Data drift | Data drift monitoring with auto-trigger alerts for proactive model fixes |
| Explainability | Explainability-enabled understanding of the inner workings of the models |
| Data quality | Tracking input data quality and completeness allows proactive action in model refresh process |
| Production readiness | Standard code guidelines for data scientists speed up development and CI/CD processes |
| ML Testing Framework | Test various ML experiments on different criteria and make a carefully informed decision before choosing the model to deploy on production workloads |
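
As an illustration of the "Data quality" row above, the following is a minimal sketch of an input completeness check. It is not Tredence’s implementation: the function names, the row-as-dictionary representation, and the 95% minimum are assumptions made for the example.

```python
from typing import Any, Dict, Iterable, List, Mapping, Sequence


def completeness(
    rows: Iterable[Mapping[str, Any]], required: Sequence[str]
) -> Dict[str, float]:
    """Share of rows holding a non-missing value, per required column."""
    rows = list(rows)
    if not rows:
        return {col: 0.0 for col in required}
    return {
        col: sum(1 for r in rows if r.get(col) is not None) / len(rows)
        for col in required
    }


def columns_below(report: Mapping[str, float], minimum: float = 0.95) -> List[str]:
    """Hypothetical gate: columns too incomplete to feed a model refresh."""
    return sorted(col for col, share in report.items() if share < minimum)


batch = [
    {"age": 30, "income": 1000},
    {"age": None, "income": 2000},
    {"income": 3000},
    {"age": 41, "income": 500},
]
report = completeness(batch, ["age", "income"])
flagged = columns_below(report)
```

A check of this kind could run before each model refresh, so that a batch with flagged columns is held back for review instead of silently degrading the retrained model.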

Business impact of using machine learning best practices

The business impact of machine learning is both impressive and far-reaching:

- 15-20% of sales budget on promotional spends restructured for CPG firms to ensure higher ROI

- 95% drop in production downtime for companies

- Targeted campaigns to reduce customer churn for telecommunication companies

- Transparent screening process for insurers

- Accelerated rate of ML/AI innovations for customer-centric businesses

ML systems and their dependencies are driving exponential demand for intelligent production systems, and the MLOps framework is key to further advancements in the technology sector.

In Conclusion

With the increasing use of ML across industry sectors and the need for maintainable and scalable ML-powered applications, adoption of the MLOps framework and Model Ops culture should become standard for all those who work with AI over the next few years. After all, MLOps has proven essential for large-scale projects, and its adoption has generated extensive benefits. Currently, there are several MLOps tools that help data science and engineering teams manage their projects, from open-source tools such as MLflow, DVC, TFX, and Kubeflow to cloud resources such as AWS SageMaker, Valohai, Algorithmia, DataRobot, and Neptune.ai. The list doesn’t end here: updates, products, algorithms, and more emerge continuously. If you want to understand how your business-critical processes can be set up and maintained more effectively and productively, contact Tredence or write to us at marketing@tredence.com.

Author: Editorial Team, Tredence
