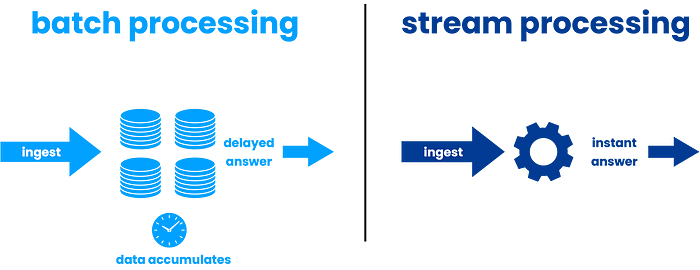

Replacing batch-processed data models with continuous streaming pipelines makes real-time insights possible, but the move comes with obstacles. In this blog, we examine the main obstacles that batch-oriented systems face when adapting to streaming data and discuss potential solutions.

Challenges in Transitioning to Streaming Pipelines

- Data Aggregation and Pivoting: Batch processing excels at these tasks, allowing for calculations and transformations over complete datasets. In streaming pipelines, where data arrives continuously, it’s not always feasible to wait for the entire dataset before performing these operations.

- Streaming to Streaming Joins: Joining data sets from multiple streams can be intricate. Traditional batch joins, which rely on complete datasets being available, may not be suitable. Techniques like time-windowed joins or stateful computations are needed to achieve joins in a streaming environment.

- Inner Join Data Drops: Inner joins in streaming pipelines can lead to data loss. If a record from one stream arrives late (due to network delays or processing issues), it might miss its corresponding record in the other stream, resulting in dropped data.

- Streaming Bottlenecks: Processing real-time data can strain resources. Identifying bottlenecks through profiling and utilizing micro-batching techniques, which process data in smaller chunks, can help alleviate pressure on the system.

- Increased Cost in Production: While streaming eliminates batch processing jobs, it introduces constant data processing costs. Cloud-based solutions with pay-as-you-go models and autoscaling capabilities can help optimize costs.

- Late Arriving Dimensional Data: Stream processing often deals with data arriving out of order, particularly for dimensional data like customer information or product details. This mismatch can create difficulties in enriching streaming data with the appropriate contextual information.
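
To make the inner-join data drop concrete, here is a minimal sketch in plain Python, not tied to any streaming framework. The `order` and `click` stream names, the tuple layout, and both function names are assumptions made for illustration. The first function matches a click only against orders that have already arrived, so an out-of-order pair is lost; the second keeps state for both sides, which is the idea behind the stateful joins mentioned above.

```python
from collections import defaultdict


def naive_inner_join(events):
    """Join each click to an order only if the order has already arrived."""
    orders = {}
    joined = []
    for side, key, payload in events:
        if side == "order":
            orders[key] = payload
        elif key in orders:
            joined.append((key, orders[key], payload))
        # A click whose order has not arrived yet is silently dropped here.
    return joined


def buffered_inner_join(events):
    """Buffer both sides so a match is emitted whichever record arrives first."""
    orders = defaultdict(list)
    clicks = defaultdict(list)
    joined = []
    for side, key, payload in events:
        if side == "order":
            orders[key].append(payload)
            joined.extend((key, payload, click) for click in clicks[key])
        else:
            clicks[key].append(payload)
            joined.extend((key, order, payload) for order in orders[key])
    return joined


# Hypothetical interleaved stream: (side, join key, payload), in arrival order.
events = [
    ("order", "k1", "order-1"),
    ("click", "k1", "click-1"),
    ("click", "k2", "click-2"),  # arrives before its order
    ("order", "k2", "order-2"),  # the late record
]

print(naive_inner_join(events))     # the k2 pair is missing
print(buffered_inner_join(events))  # both pairs are present
```

The buffers in this sketch grow without bound. A real pipeline would normally restrict them to a time window and evict expired state, which is where time-windowed joins come in.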

Overcoming Obstacles in Streaming Pipeline Transition

- Micro-Batching: This technique groups incoming data into small batches over short time windows, allowing for aggregation and pivoting within those batches. This balances processing efficiency with real-time responsiveness.

- Pivoting: Pivoting can be achieved by breaking the data into smaller dataframes and merging them into a single desired column. However, for large datasets, this might lead to bottlenecks in the stream.

- Stateful Stream Processing: Frameworks like Apache Flink and Apache Spark Streaming allow maintaining state information across processing windows. This enables joins on related data streams even if records arrive at different times.

- Outer Joins and Data Deduplication: Consider using outer joins in your streaming pipelines to capture all relevant data, even if the corresponding record from another stream is missing. Additionally, employing deduplication techniques can ensure you don’t process the same record multiple times.

- Early Loading and Late Binding: Strategies like early loading dimensional data into a separate stream or utilizing techniques like late binding can help address the challenge of delayed arrival times. Here, records can be enriched with the appropriate dimension data when it becomes available.

- Materialized Views: Pre-computing aggregations and storing them as materialized views can significantly improve response times for frequent queries in streaming pipelines.

- Micro-Batching for Joins: Beyond aggregation, micro-batching breaks continuous data streams into smaller, manageable chunks on which joins can run much as they do in batch processing, while maintaining near real-time capabilities.

- Kappa Architecture: This architecture treats all data as a stream and uses a single stream-processing path, with no separate batch layer. It embraces the idea of potentially processing some data multiple times: when the logic changes or more complex analysis is needed, the retained event log is replayed through the streaming pipeline to recompute the results.

- Lambda Architecture: This architecture combines a real-time processing layer (speed layer) with a batch processing layer (batch layer). The speed layer handles time-sensitive data, while the batch layer ensures data consistency and enables more complex analysis on the entire dataset.
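
As an illustration of the micro-batching and pivoting ideas above, the following sketch groups events into fixed, non-overlapping (tumbling) windows and aggregates within each one. It is plain Python over an in-memory list, not a framework API; the event layout `(timestamp, key, amount)` and the function names are assumptions made for the example.

```python
from collections import defaultdict


def tumbling_window_sums(events, window_seconds):
    """Sum amounts per (window_start, key) over fixed, non-overlapping windows."""
    totals = defaultdict(float)
    for timestamp, key, amount in events:
        # Every timestamp maps to exactly one window, identified by its start.
        window_start = (timestamp // window_seconds) * window_seconds
        totals[(window_start, key)] += amount
    return dict(totals)


def pivot_by_window(totals):
    """Pivot (window_start, key) totals into one row per window, one column per key."""
    rows = defaultdict(dict)
    for (window_start, key), total in totals.items():
        rows[window_start][key] = total
    return dict(rows)


# Hypothetical events: (timestamp in seconds, key, amount).
events = [
    (0, "a", 1.0),
    (3, "b", 2.0),
    (4, "a", 2.5),
    (5, "a", 4.0),
    (9, "b", 1.0),
]

result = tumbling_window_sums(events, window_seconds=5)
print(pivot_by_window(result))
# {0: {'a': 3.5, 'b': 2.0}, 5: {'a': 4.0, 'b': 1.0}}
```

In a live pipeline each window would be emitted when it closes rather than computed over a finished list, but the grouping logic is the same. The window size is the knob that trades latency against overhead.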

Key Factors for Successful Streaming Pipeline Implementation

- Windowing and State Management: Choosing the appropriate window size for micro-batching and state management is crucial. Smaller windows provide near real-time insights but increase processing overhead. Larger windows are more efficient but delay insights.

- Error Handling and Idempotence: Streaming pipelines can encounter errors due to network issues or processing failures. Implementing robust error handling mechanisms and ensuring idempotent operations (operations whose result is the same whether they are applied once or several times, so that retries do not duplicate their effect) is vital.

- Monitoring and Optimization: Regularly monitoring your streaming pipeline performance and resource utilization allows for continuous optimization. Adapt window sizes, state management strategies, and error handling approaches as needed to ensure efficient and reliable data processing.
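
One common way to get idempotent behavior is to key every write on a unique event identifier, so that a retried batch does not change the result. The sketch below assumes each record carries an `event_id` field; the `IdempotentSink` class and the sample data are hypothetical and stand in for whatever store the pipeline writes to.

```python
class IdempotentSink:
    """In-memory sink keyed by event_id: re-applying a record leaves state unchanged."""

    def __init__(self):
        self.rows = {}
        self.applied = 0

    def write(self, record):
        """Store the record once; return False if it was already applied."""
        event_id = record["event_id"]
        if event_id in self.rows:
            return False  # duplicate delivery, ignore it
        self.rows[event_id] = record
        self.applied += 1
        return True


# Hypothetical batch of records.
batch = [
    {"event_id": "e1", "amount": 10},
    {"event_id": "e2", "amount": 5},
]

sink = IdempotentSink()
for record in batch:
    sink.write(record)
for record in batch:  # simulated retry after a failure: the batch is delivered again
    sink.write(record)

total = sum(row["amount"] for row in sink.rows.values())
print(sink.applied, total)  # 2 15
```

Without the `event_id` check, the retry would double the total. With it, at-least-once delivery upstream still yields a correct result downstream.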

Conclusion:

Switching from batch processing to streaming pipelines makes valuable real-time insights possible. You can smooth the transition by recognizing the difficulties with data aggregation, joins, late-arriving data, and possible data loss, and by putting suitable solutions such as micro-batching, stateful processing, and windowing techniques into practice. Keep in mind that sustaining a dependable and effective streaming data pipeline requires constant monitoring and optimization. Embrace the flow and discover how real-time data can help you make better decisions!

AUTHOR

Ankit Adak

Analyst
