Multi-label Document Tagging: Intelligent Audio Transcript Analytics Solution Strategically Managing and Sorting Important Texts for Scaling Industrial NLP Applications

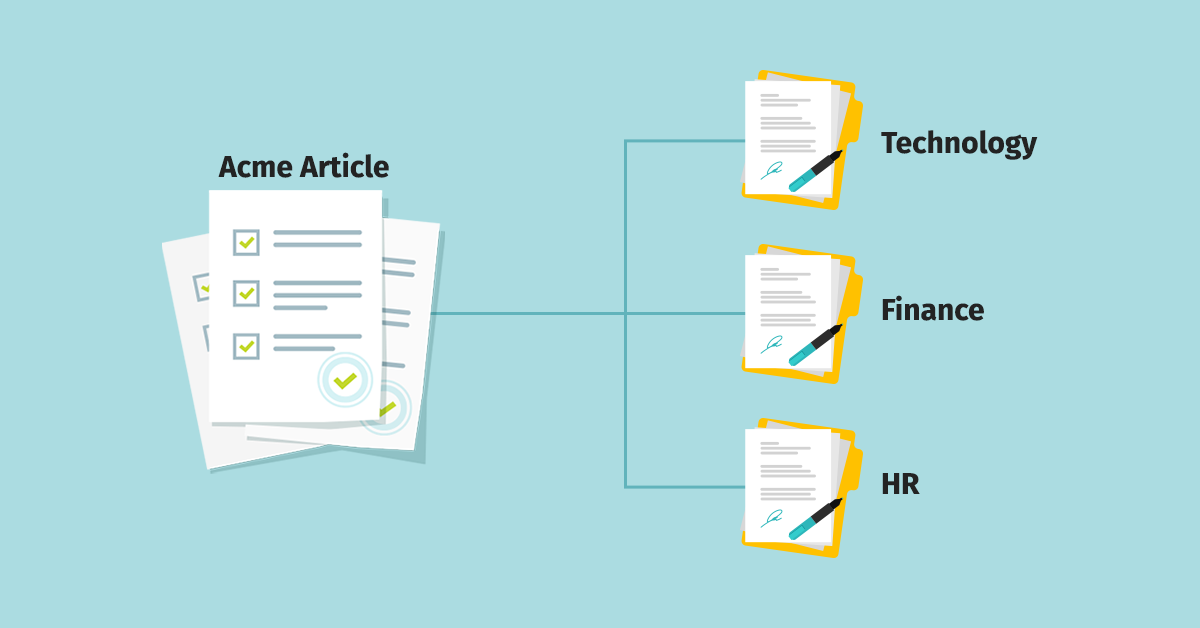

This blog is the last in a four-part series exploring multi-label document tagging. The specific problem is to label audio transcript documents with client-defined categories. These labels are then used for automatic routing, identifying category overlaps, and so on.

Critical Challenge Associated With Multi-label Document Tagging

Single-label ground truth: Although the task is to assign multiple labels, experts have tagged each transcript with only a single label. This makes it challenging to apply supervised learning algorithms and to evaluate any additional predicted labels.

A More Detailed Look at the Problems of the Traditional Approaches

Most document tagging problems you may have encountered use a supervised approach that formulates the problem as multi-class or multi-label text classification. In our case, this approach has several drawbacks. First, we don’t have multi-label tags for our transcripts; each one carries only a single label. Second, and critical from a scaling point of view, we’re currently developing solutions for more than 10 categories, but the deployed solution should eventually run for approximately 200. Supervised learning becomes harder to apply at that scale, because every category needs enough labeled examples.

Our Scientific Approach

Our analysts have written several research documents for each of these categories. We use this knowledge to label the transcripts. Let’s give you a sneak peek into our high-level approach.

- Vocabulary Creation: Create a vocabulary corpus for each category using the research and supporting documents. Business knowledge is then incorporated to determine which sections of the documents are specific to that category and which only discuss the general area.

- Feature Selection: This is a widely undervalued area in text applications. Most available resources jump directly into text representations with thousands of features, hoping that keeping more features will cover the representation well. Text feature representation libraries in Python, such as scikit-learn, also select top features based on the corpus vocabulary. However, we’ve seen that discriminatory feature selection techniques remove noisy features and reduce the feature set without losing information.

- Feature Normalization: Are ‘apps’ and ‘applications’ the same? Are ‘leader’ and ‘leaders’ the same? If your answer is yes, this step is crucial in further reducing the noise. Techniques for achieving this normalization range from linguistic ones like stemming and lemmatization to language-model techniques like word embedding similarity.

- Text Vectorized Representation: Once we have the final features, we have several methods to represent text: Bag of Words, Binary, TF-IDF, and Embeddings. As general advice, first test the system with standard representations, identify the cases where embeddings can help, and then use embeddings for those cases. In our scenario, the documents that represent each category are well-written texts, while the transcripts are conversational. Hence, there can be a lot of slang and lingo terms that we want to match. For example: ‘apps’ with ‘applications,’ ‘ai’ with ‘artificial intelligence,’ ‘ceos’ with ‘c-suite,’ and so on. To achieve this, we fine-tuned a universal word embedding on a domain-specific corpus and created a client-specific language model. This embedding model can be used in several ways to represent text, such as an average of feature vectors or an IDF-weighted average of feature vectors.

- Similarity Matching: Once we have represented the categories in vectors using the above methods, we transform the transcripts to the same dimensional space. We then use the cosine similarity to identify the nearest categories for each of the transcript documents.

- Dynamic Tagging: Instead of providing the top three labels based on similarity, we use a differential cut-off to identify the number of labels dynamically ranging from 1 to 3.
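To make the last few steps concrete, here is a minimal sketch of normalization, TF-IDF representation, cosine similarity matching, and dynamic tagging, using only the Python standard library. It is an illustration under stated assumptions, not our production pipeline: the category texts, the `NORMALIZE` lookup table, and the `keep_ratio` cut-off rule are all hypothetical stand-ins (the lookup table replaces stemming or embedding similarity, and the cut-off rule is one plausible reading of a differential cut-off).

```python
import math
from collections import Counter

# Hypothetical lookup table standing in for stemming or embedding similarity.
NORMALIZE = {"apps": "applications", "leaders": "leader"}

def tokenize(text):
    """Lowercase, split on whitespace, and map variants to one canonical form."""
    return [NORMALIZE.get(tok, tok) for tok in text.lower().split()]

def build_idf(docs):
    """Smoothed inverse document frequency computed over the category documents."""
    n = len(docs)
    df = Counter()
    for doc in docs:
        df.update(set(tokenize(doc)))
    return {term: math.log((1 + n) / (1 + count)) + 1.0 for term, count in df.items()}

def tfidf_vector(text, idf):
    """Sparse TF-IDF vector restricted to the category vocabulary."""
    counts = Counter(tok for tok in tokenize(text) if tok in idf)
    return {term: count * idf[term] for term, count in counts.items()}

def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(weight * v.get(term, 0.0) for term, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def dynamic_tags(scores, max_labels=3, keep_ratio=0.6):
    """Always keep the best label; keep further labels (up to max_labels)
    only while their score stays within keep_ratio of the best score.
    This cut-off rule is an assumption made for illustration."""
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    top_label, top_score = ranked[0]
    tags = [top_label]
    for label, score in ranked[1:max_labels]:
        if score <= 0.0 or score < keep_ratio * top_score:
            break
        tags.append(label)
    return tags

# Toy category vocabularies (made up for this example).
categories = {
    "mobile": "mobile applications smartphone applications developers platform",
    "ai": "artificial intelligence machine learning models automation",
    "leadership": "leaders executives strategy board management",
}
transcript = "our developers ship smartphone apps that use machine learning models"

idf = build_idf(list(categories.values()))
category_vectors = {name: tfidf_vector(text, idf) for name, text in categories.items()}
transcript_vector = tfidf_vector(transcript, idf)

scores = {name: cosine(transcript_vector, vec) for name, vec in category_vectors.items()}
tags = dynamic_tags(scores)
print(tags)  # ['mobile', 'ai']
```

In this toy run the transcript shares vocabulary with two categories and none with the third, so the cut-off returns two labels instead of a fixed top three. In practice the threshold would need tuning against expert-reviewed transcripts.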

Why Is Our Methodology Robust?

- Our approach is not supervised. Hence, it depends neither on experts’ annotations nor on a large sample size for each category.

- Our scientific feature selection process selects only the features that add value to the representation. Hence, it scales easily to more categories.

- Our custom language model representation techniques treat related features as similar and compensate for transcription inaccuracies.

- We have several methodologies in the pipeline, such as hierarchical categorization, to scale this approach to over 200 categories.

- We have achieved human-level precision in multi-labeling using linguistic rules, domain-trained BERT language models, and several business considerations.

We believe that such innovative approaches need pilot testing before they can be built into large-scale industrial NLP solutions. And we’re taking a step in that direction every day.
