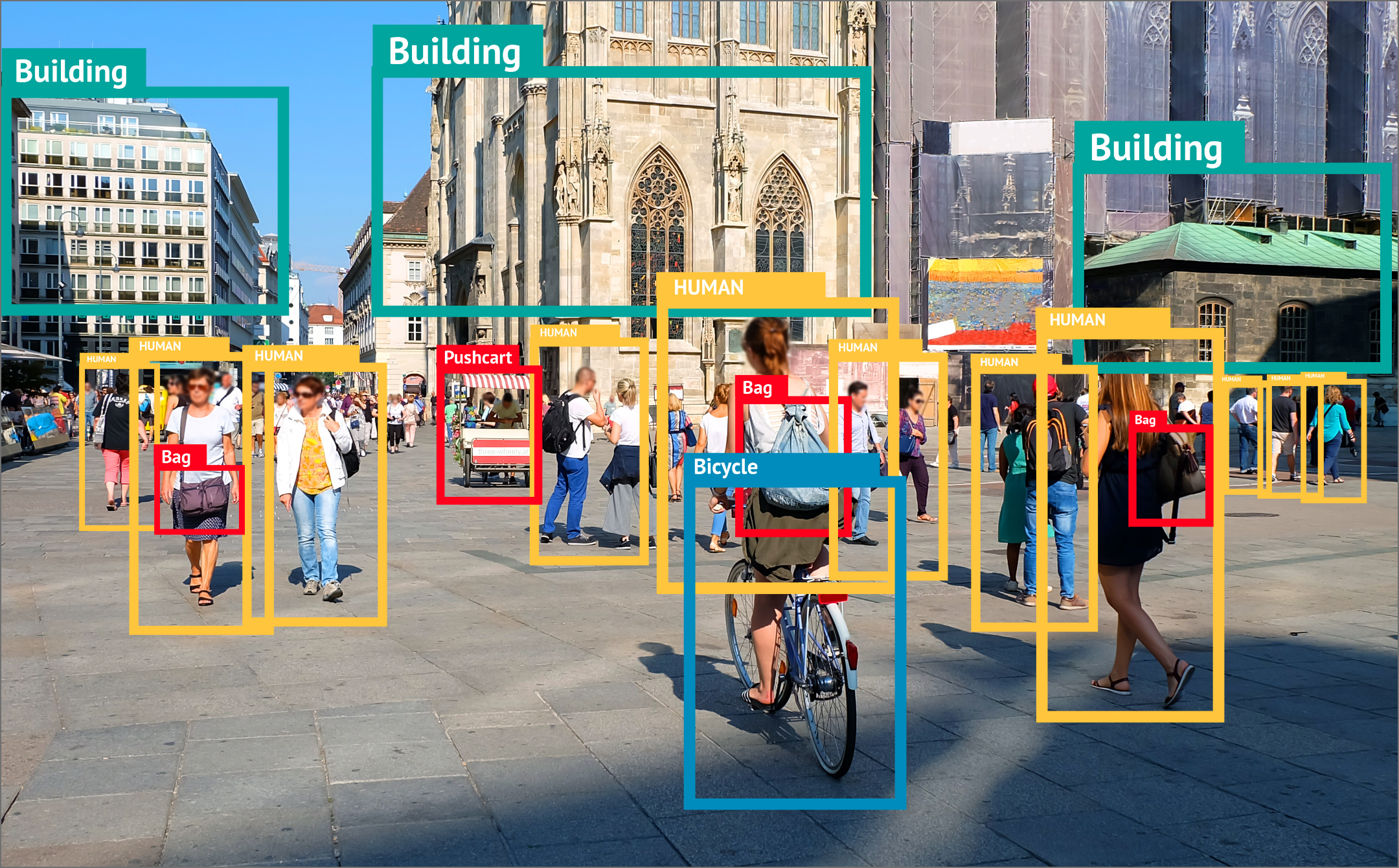

Accurate object detection is key to countless real-world applications, from self-driving cars to medical image analysis. The journey towards today's sophisticated systems began with R-CNN.

R-CNN played a groundbreaking role in the field of object detection, introducing several innovations that paved the way for modern, highly accurate object detectors. It introduced a modular pipeline with separate stages for proposal generation, feature extraction, and classification. While later models moved towards unified training, this stage-wise approach provided a foundation for understanding the breakdown of the object detection process.

Let’s walk through some of the key ideas and understand the inner workings of the R-CNN family of detectors.

Object Detection before R-CNN

Before R-CNN, object detection primarily relied on meticulously designed detectors to scan every possible location and scale in an image. Features such as HOG or SIFT, manually designed to capture shape and texture, were used to identify objects. These approaches were computationally expensive as they had to search the whole image at different locations.

Viola-Jones Detector

| The Viola-Jones algorithm is a classic object detection method famed for its speed and ability to achieve real-time face detection in the early 2000s. Its core innovation lies in the use of Haar-like features. These simple rectangular features capture differences in brightness and can be computed with extreme efficiency using an integral image representation. The algorithm trains classifiers using the AdaBoost algorithm to select the most discriminative Haar-like features. |

Figure 1: Haar-like Cascades |

HOG Detector

The HOG detector was a popular method for object detection that aimed to capture the overall shape and appearance of objects based on the distribution of local intensity gradients (edges) within an image. The HOG detector, often combined with a sliding window approach, was a significant tool for object detection tasks, especially pedestrian detection, before the dominance of deep learning methods.

Deformable Part-Based Model

DPMs were a significant advancement in pre-deep learning object detection. They aimed to model objects as flexible combinations of parts to better handle deformations and occlusions compared to rigid object detectors.

The flexible arrangement of parts allowed DPMs to capture moderate pose variations and partial occlusions. The part-based approach provided some intuition on which parts of the model helped with the detection, making them more interpretable. However, since DPMs still use HOG features, their representational power is limited compared to learned features in deep learning. Also, the optimization for finding the best part configuration was computationally demanding.

R-CNN Architecture

The seminal R-CNN paper, "Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation," describes RCNN in the following manner:

| Regions with CNN Features (RCNN) model uses high-capacity CNNs to bottom-up region proposals in order to localize and segment objects. It uses selective search to identify a number of bounding-box object region candidates (“regions of interest”) and then extracts features from each region independently for classification. |

To understand this better, let’s break down the problem it’s trying to solve and see how it addresses each piece individually. The main challenge of object detection is two-fold:

- Where: Locating objects and their bounding boxes.

- What: Identifying the class of the object (e.g., “cat,” “car,” “cycle,” etc.)

R-CNN tackles these problems using a sequence of different techniques.

Region Proposal: Selective Search

| To address the “where” side of the problem, we would want to search for “interesting” regions within the image where we might find some of the objects we are trying to detect. To this end, R-CNN leverages Selective Search. |

Selective Search aims to group similar regions, starting from a fine-grained over-segmentation and progressing hierarchically. The process can be described in the following steps:

Step I: Initial Over-segmentation

The image is divided into many small, homogeneous regions. This is usually based on pixel similarities involving color, texture, intensity, etc.

Step II: Hierarchical Merging

- Similarity Measures: Selective Search uses a combination of similarity metrics:

- Color similarity (color histograms)

- Texture similarity (gradients)

- Size similarity (favors smaller regions merging first)

- Shape Compatibility (how well regions fit together when combined)

- Greedy Combination: Starting with the most similar region pairs, Selective Search iteratively merges them. After each merge, similarities are recalculated with the newly formed, larger region.

Step III: Generating Proposals:

As the merging progresses, the remaining regions (usually around 1000-2000) are considered the region proposals.

Selective Search is much faster than exhaustive sliding window methods (used for traditional object detection tasks). It also provides proposals at varying scales, locations, and shapes. This helps the detector handle different object types and has a high recall, meaning it's good at proposing regions that contain objects, even if it suggests some extra regions, too.

Categorizing Regions

Now that we have some candidate regions to investigate, we can shift our focus to what is in these regions. Do these regions contain any of the categories we are searching for? For this, R-CNN proposes a two-step process.

Feature Extraction

Pre-trained CNN: R-CNN takes a pre-trained CNN (like AlexNet) that is already good at classifying images.

Extract Features: Each proposed region is cropped, resized, and fed into the CNN. The CNN is a powerful feature extractor, creating a rich representation of what's inside that region.

Classification

R-CNN trains a separate Support Vector Machine (SVM) classifier for each object class you want to detect. These SVMs take the CNN's extracted features and tell you the probability of the region containing that object (e.g., one SVM for "car," another for "person," etc.).

So, when we obtain features for each bounding box, we pass them through each SVM to get different scores across all categories.

Bounding Box Regression

The paper finally proposes one last step to refine the output: introducing a bounding-box regressor. This regressor takes the region's features and fine-tunes the location of the initially proposed bounding box to better fit the object.

Finally, R-CNN has produced a list of bounding boxes. Each box has:

- An object category label (e.g., "person")

- A confidence score from the SVM

- Refined bounding box coordinates.

Limitations

While R-CNN was a breakthrough in object detection, it had certain limitations that paved the way for further improvements:

Slow Speed:

R-CNN is considered notoriously slow due to several factors:

- Multiple Stages: Processing each image involved running Selective Search, extracting CNN features for thousands of proposals, and then classifying them separately.

- Redundant Computations: CNN feature extraction happened independently on each region proposal; even overlapping regions shared a lot of computation.

- Disjointed Training Pipeline: Training R-CNN is a multi-stage process:

- Pre-training a CNN on a large image classification task.

- Fine-tuning the CNN on region proposals generated for object detection.

- Training SVMs separately.

- Finally, training the bounding box regressors. This fragmented process made it complex to optimize.

Selective Search Bottleneck

R-CNN relied on Selective Search for region proposals. While efficient, Selective Search became a bottleneck for overall speed, and its parameters could affect detection performance.

Fast R-CNN

Fast R-CNN was a significant speed improvement over R-CNN, addressing major computational inefficiencies. It introduced the idea of sharing CNN feature computation and replaced SVMs with a single neural network for classification and bounding box regression, streamlining the process and boosting speed.

Fast R-CNN aimed to fix the major efficiency issues of R-CNN. The procedure introduced was as follows:

- Single Feature Map: Instead of extracting features from each region proposal separately, Fast R-CNN runs the CNN on the entire input image once and generates a feature map.

- ROI Pooling: A new layer called ROI Pooling takes the region proposals and extracts fixed-size feature vectors from the shared feature map for each proposal. This avoids redundant feature computation. [Diagram of ROI Pooling with region proposals mapped onto feature map]

- Multi-task Training: Fast R-CNN combines classification and bounding box regression into a single neural network with multi-task loss, eliminating separate training stages.

Limitations:

- Dependence on Separate Proposal Method: Fast R-CNN still used Selective Search for region proposals, which remained a computational bottleneck.

- Potential for Further Optimization: While significantly improved, the architecture might not be fully optimized for real-time object detection.

Faster R-CNN

The most significant leap forward came with Faster R-CNN, where it introduced the Region Proposal Network (RPN). The RPN is a small network that directly learns to predict object proposals, finally removing the dependency on Selective Search and enabling near real-time object detection.

- Region Proposal Network (RPN): The core innovation in Faster R-CNN. The RPN is a small neural network that slides over the convolutional feature map from the backbone network, predicting object proposals (or "anchors") at various scales and aspect ratios.

- Speed: The RPN shares convolutional computations with the leading detection network, making proposal generation much faster than Selective Search.

- Unified Model: Faster R-CNN integrates the RPN, the feature extractor, and the classification/bounding box regression branches into a single, end-to-end trainable model. This reduces complexity and allows for joint optimization.

Limitations

Two-Stage Process: While faster than R-CNN and Fast R-CNN, Faster R-CNN is still a two-stage detector (proposals + classification), which can limit its real-time capability.

RPN Hyperparameters: The performance of the RPN can be sensitive to the choice of anchors and other hyperparameters.

Mask R-CNN

While Faster R-CNN excels at drawing boxes around objects, Mask R-CNN determines the exact outline of each object within those boxes, providing incredibly fine-grained information. A parallel branch (alongside those for classification and bounding box regression) specifically for predicting pixel-level segmentation masks for each detected object instance.

Mask R-CNN builds upon the core architecture of Faster R-CNN, extending its capabilities beyond bounding box detection. Its key feature is the addition of a mask branch:.

- Mask Branch: A parallel branch alongside existing classification and bounding box branches, specifically trained to predict pixel-level segmentation masks for each detected object instance.

- Beyond Bounding Boxes: While Faster R-CNN excels at drawing boxes around objects, Mask R-CNN determines the precise outline of each object within those boxes. This provides highly detailed object information. [Image comparing simple bounding box output vs. Mask R-CNN predicted masks]

FasterRCNN with a Retail Use-Case

Ensuring shelves are fully stocked is paramount to customer satisfaction and sales success. By leveraging Object Detection technologies, we can automatically recognize and track products on shelves; object detection algorithms enable real-time monitoring of stock levels, identification of empty spaces or misplaced items, and even prediction of future demand based on sales patterns. This automation streamlines the restocking process, reduces labor costs, and minimizes the likelihood of empty shelves, ultimately enhancing the shopping experience and boosting revenue for retailers.

We use a CPG dataset consisting of shelves stocked with various cold drinks belonging to six brands. For this use case, we will work with Faster-RCNN. The model, as discussed earlier, consists of several stages. For tuning the model, we can keep the initial convolutional layers and RPN layers intact while replacing the predictor layer to accommodate our six classes for the six brands that our dataset has. This allows us to train the model using a few examples as the feature extractors and RPN layers are pre-trained.

|

Figure 3: Store Shelf |

Figure 2: Annotated by FasterRCNN model |

Beyond RCNN

While the R-CNN family of models revolutionized object detection by introducing region proposals and convolutional neural networks, newer models like YOLO and SSD have largely taken over in practical applications. The main reason for this shift is their focus on speed and efficiency, making them better suited for real-time processing. We can observe the benefits and differences among these model families in the following table:

| Feature | R-CNN Family | YOLO Family | SSD Family |

| Architecture Type | Two-stage | Single-stage | Single-stage |

| Region Proposals | Region Proposal Network (RPN) | Divides the image into grid cells | Default boxes at multiple scales |

| Speed | Generally slower | Fastest | Balance between speed and accuracy |

| Accuracy | Typically highest | Good, but struggles with smaller objects | Between R-CNN and YOLO |

| Main Strength | High accuracy | Real-time speed | Compromise between accuracy and speed |

| Ideal Use Case | Prioritizing accuracy | Applications requiring real-time processing | Scenarios needing both accuracy and reasonable speed |

Conclusion

While surpassed in speed by modern single-stage detectors like YOLO and SSD, the R-CNN family of models lays a crucial foundation for the field of object detection. The introduction of region proposals significantly streamlined the detection process compared to exhaustive sliding window approaches. Harnessing the power of convolutional neural networks for feature representation in object detection also paved the way for the dramatic accuracy improvements seen in today's systems.

Though refined for efficiency, the staged training approach of these models shaped the understanding of the object detection pipeline. Even as architectures evolved, with Faster R-CNN integrating proposal generation and Mask R-CNN extending capabilities to instance segmentation, the core principles established by R-CNN remained influential.

Gain clarity on single-stage object detection techniques with our deep dive! Explore expert-led data science services designed to enhance your projects. Reach out for expert advice today!

AUTHOR - FOLLOW

Amey Patil

Data Scientist Consultant

Topic Tags